What is the Lightrun MCP?

The Lightrun MCP is built on the Model Context Protocol (MCP), an open standard that defines how AI tools discover capabilities, authenticate, and invoke actions on external systems. By using MCP, Lightrun exposes its production-safe runtime debugging APIs in a structured and secure way, allowing AI agents to go beyond static code analysis and reason about real execution behavior, state, and control flow.

Starting with Lightrun version 1.75, Lightrun provides a hosted MCP server that lets AI-powered coding assistants (such as Cursor, Gemini Code Assist, or GitHub Copilot) interact directly with live application runtimes whenever they need real-time runtime data. This integration gives AI tools first-class access to runtime insights, helping developers investigate issues that are difficult or impossible to reproduce locally. The Lightrun MCP communicates using a streamable HTTP-based protocol over HTTPS, following security best practices to protect data in transit between MCP clients and the server.
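To make the transport concrete, the following minimal sketch builds (but does not send) the first message an MCP client posts over streamable HTTP: a JSON-RPC `initialize` request. The message shape follows the public MCP specification. The endpoint URL, the client name, and the choice of protocol revision `2025-03-26` are assumptions for illustration; this page does not state which values a Lightrun deployment expects.

```python
import json
import urllib.request

# Hypothetical endpoint: the real URL depends on your Lightrun deployment.
MCP_URL = "https://lightrun.example.com/mcp"


def build_initialize_request(url: str = MCP_URL) -> urllib.request.Request:
    """Build the JSON-RPC `initialize` request that opens an MCP session."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            # Assumed revision; the server may negotiate a different one.
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            # Placeholder client identity, not a real product name.
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP clients accept a plain JSON reply or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


# Constructing the request performs no network I/O.
request = build_initialize_request()
```

In practice an AI coding tool performs this handshake for you, and a real request would also carry the credentials required by your Lightrun Server.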

In pre-production, developers use Lightrun MCP to inspect logic flow in real time, verify function arguments, and capture context in rare edge cases. In runtime environments, Lightrun MCP helps investigate issues that cannot be reproduced locally, capture conditional state, and identify memory leaks or resource misuse.

Lightrun MCP architecture

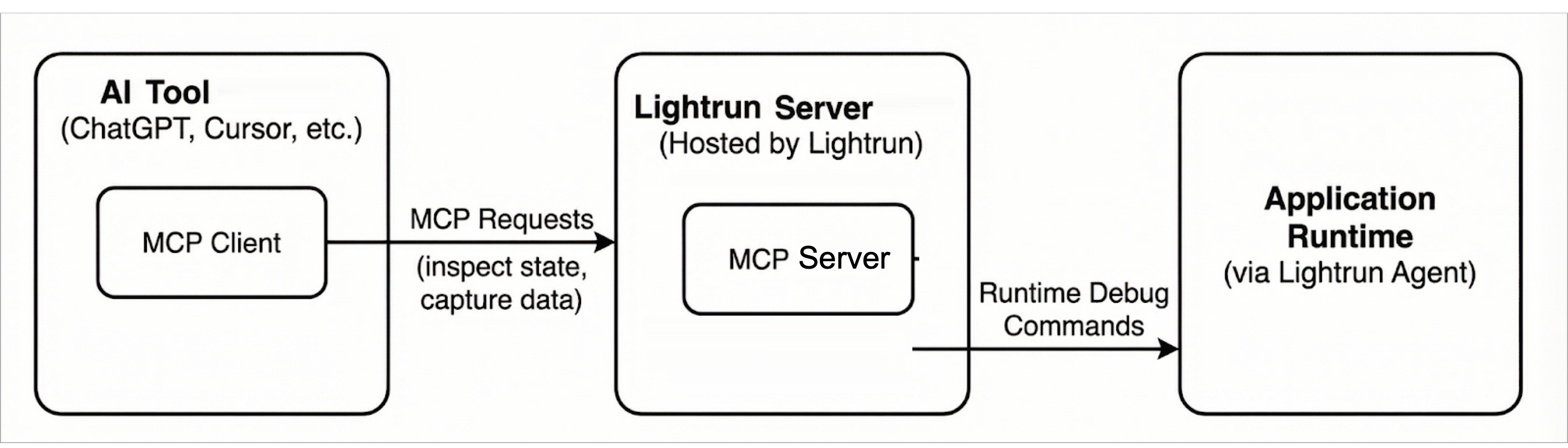

The Lightrun MCP architecture follows the standard MCP client–server model and consists of the following components:

MCP Client

The MCP client is embedded in AI coding tools (such as Cursor). It issues MCP requests to inspect application state and request runtime data.

MCP Server (Lightrun MCP Server)

The Lightrun MCP Server is a component of the Lightrun Server and implements the MCP interface, translating MCP requests into Lightrun API calls.

In this architecture, an AI coding tool acts as an MCP client and communicates with the Lightrun Server using MCP. The server mediates all interactions with the application runtime, ensuring that debugging and observability operations are executed safely and without requiring code changes or redeployments.

The diagram at the start of this section shows the overall Lightrun MCP architecture and the interaction between MCP clients, the Lightrun Server, and the application runtime.

Security and privacy

Data protection

MCP results are subject to PII redaction based on your PII redaction configuration.

Authentication

Authentication is required to access Lightrun MCP. MCP clients authenticate using Lightrun Server credentials, and access is governed by existing Lightrun roles and access permissions. Authorization is enforced each time a tool is used, not when the connection to the Lightrun MCP Server is established.
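The distinction between authenticating a connection and authorizing each tool call can be sketched as follows. This is a conceptual illustration only, not Lightrun's implementation: the tool names, permission names, and helper functions are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical tool-to-permission mapping, for illustration only.
TOOL_PERMISSIONS = {
    "list_agents": "view",
    "capture_snapshot": "debug",
}


class AuthorizationError(Exception):
    """Raised when the caller's permissions do not cover a tool."""


@dataclass
class Session:
    user: str
    permissions: set = field(default_factory=set)


def connect(user: str, permissions) -> Session:
    # Connecting models authentication only: no per-tool permission
    # is checked at this point.
    return Session(user=user, permissions=set(permissions))


def call_tool(session: Session, tool: str) -> str:
    # Authorization is evaluated here, once per tool invocation.
    required = TOOL_PERMISSIONS[tool]
    if required not in session.permissions:
        raise AuthorizationError(f"{session.user} may not call {tool}")
    return f"{tool}: ok"
```

Under this model, a user whose role grants only the hypothetical `view` permission can connect and call `list_agents`, but a call to `capture_snapshot` is rejected at invocation time.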

Rules and limits

The following rules and limits apply to the results returned to AI agents when using Lightrun MCP:

- A maximum of 50 runtime inspection hits is allowed per request. The default value is 1.

- Inspected objects are limited to a depth of three nesting levels.

- The default timeout is 60 seconds, with a maximum of 10 minutes.

- Quota limits cannot be ignored or bypassed.
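The limits above can be mirrored on the client side to catch out-of-range requests early. The sketch below encodes the documented values (50 hits maximum, 1 by default, three nesting levels, 60-second default and 10-minute maximum timeout). The class and function names are hypothetical, the lower bounds of 1 are assumptions, and the way nesting levels are counted here may differ from how the Lightrun server counts them.

```python
from dataclasses import dataclass

MAX_HITS = 50
DEFAULT_HITS = 1
MAX_DEPTH = 3
DEFAULT_TIMEOUT_S = 60
MAX_TIMEOUT_S = 10 * 60  # 10 minutes


@dataclass(frozen=True)
class InspectionLimits:
    """Hypothetical client-side model of the documented request limits."""

    hits: int = DEFAULT_HITS
    timeout_s: int = DEFAULT_TIMEOUT_S

    def __post_init__(self):
        # Lower bounds of 1 are assumptions; the upper bounds are documented.
        if not 1 <= self.hits <= MAX_HITS:
            raise ValueError(f"hits must be between 1 and {MAX_HITS}")
        if not 1 <= self.timeout_s <= MAX_TIMEOUT_S:
            raise ValueError(f"timeout_s must be between 1 and {MAX_TIMEOUT_S}")


def truncate(value, depth: int = MAX_DEPTH):
    """Keep at most `depth` levels of nested dicts and lists."""
    if isinstance(value, (dict, list)):
        if depth == 0:
            return "<truncated>"
        if isinstance(value, dict):
            return {k: truncate(v, depth - 1) for k, v in value.items()}
        return [truncate(v, depth - 1) for v in value]
    # Scalars are returned unchanged at any level.
    return value
```

For example, `truncate({"a": {"b": {"c": {"d": 1}}}})` keeps the three outer dictionaries and replaces the fourth with the placeholder string, which approximates what an AI agent would see for a deeply nested object.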

Legal disclaimer

The Lightrun MCP exposes application code and runtime data to MCP clients for inspection and debugging. Avoid sharing sensitive information with MCP clients you do not trust.

Getting started

To start using Lightrun MCP, read the following topics:

- MCP quickstart guide: Connect an AI assistant to Lightrun MCP and perform an initial runtime inspection.

- Lightrun MCP tools: Reference documentation for the tools exposed by Lightrun MCP.